Anyone immersed in therapeutic discovery can attest to the due diligence required to advance candidates along the regulatory path toward treatments that could one day improve patients' quality of life. Throughout this journey, accurate quantification of biological analytes, whether proteins, nucleic acids, or other -omic targets, is vital to biomedical research and drug development pipelines. It underpins the study of mode of action, drug efficacy and safety, and patient stratification strategies, to name a few core applications.

Technological advances in downstream analytical assessment have undoubtedly continued to help researchers in this space. The reliability and throughput of immunoassays, NMR or combined/sequential MS, and iterations of NGS or PCR-based techniques make them cornerstone technologies that labs trust and that are, in most cases, complementary and orthogonal. Similarly, the range of complex sample types available for assessment, including non-invasively collected excreta or saliva, serum or plasma from minimally invasive blood draws, and methodical tissue biopsies, adds value in generating meaningful results that could translate into actionable decisions for biomarkers, ADMET, and precision medicine.

Many challenges affect the efficient use of any biological specimen, and they are accentuated for solid or tough tissues:

- Ensuring integrity of samples during collection, preservation, and storage from time of harvest

- Obtaining consistent, reproducible results, which depends on standardized processing, sectioning, and homogenization that scale easily

- Maintaining rigorous quality measures and documentation for traceability of operational procedures and real-time tracking

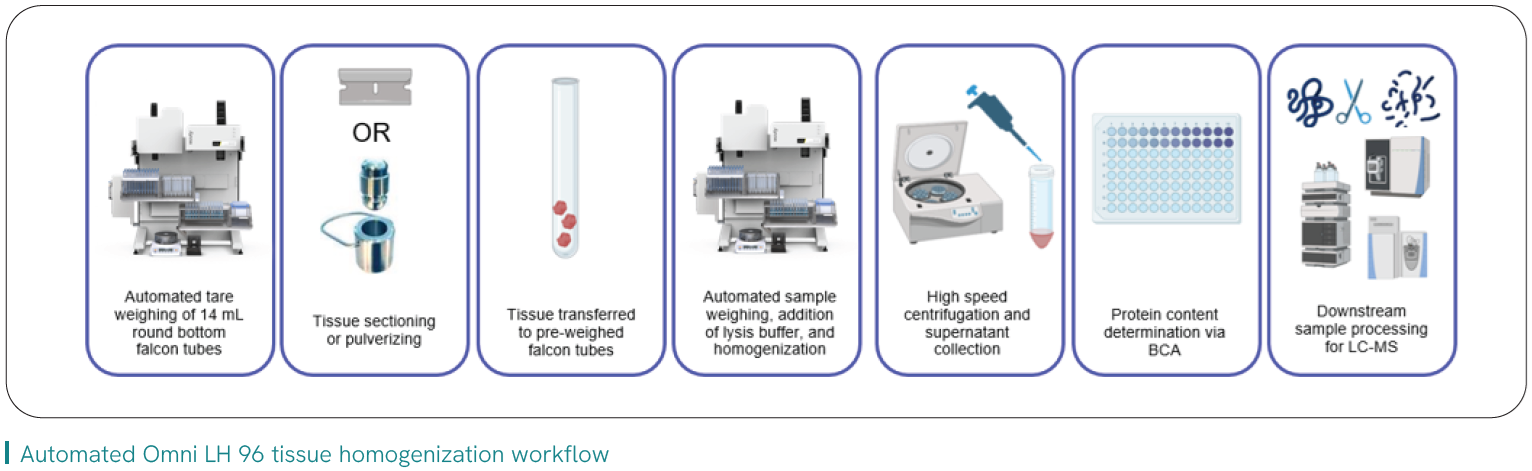

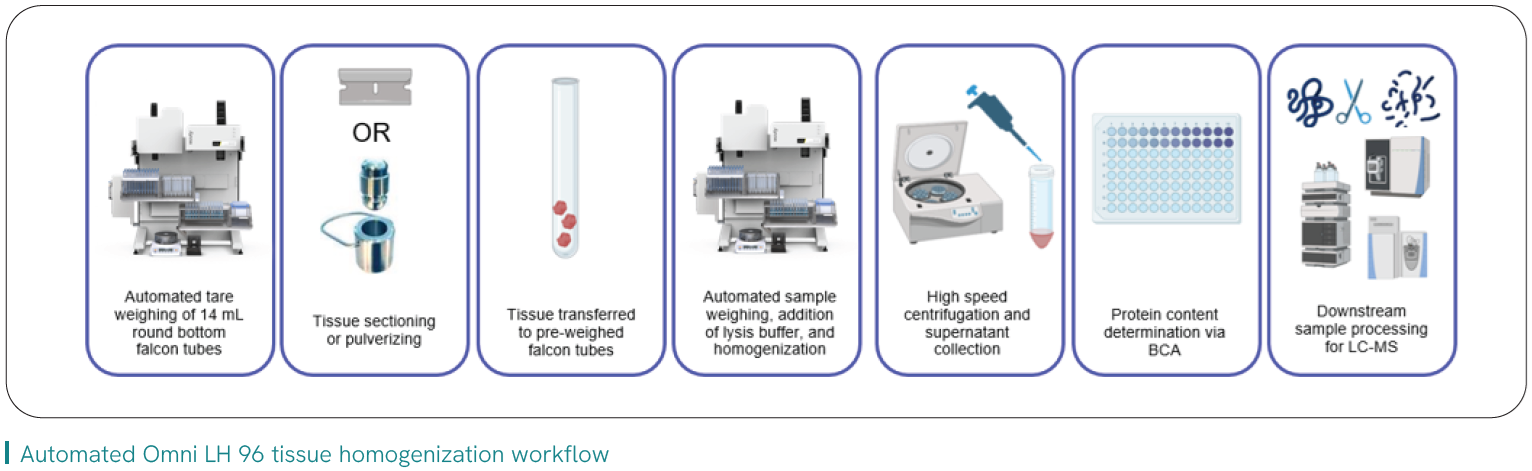

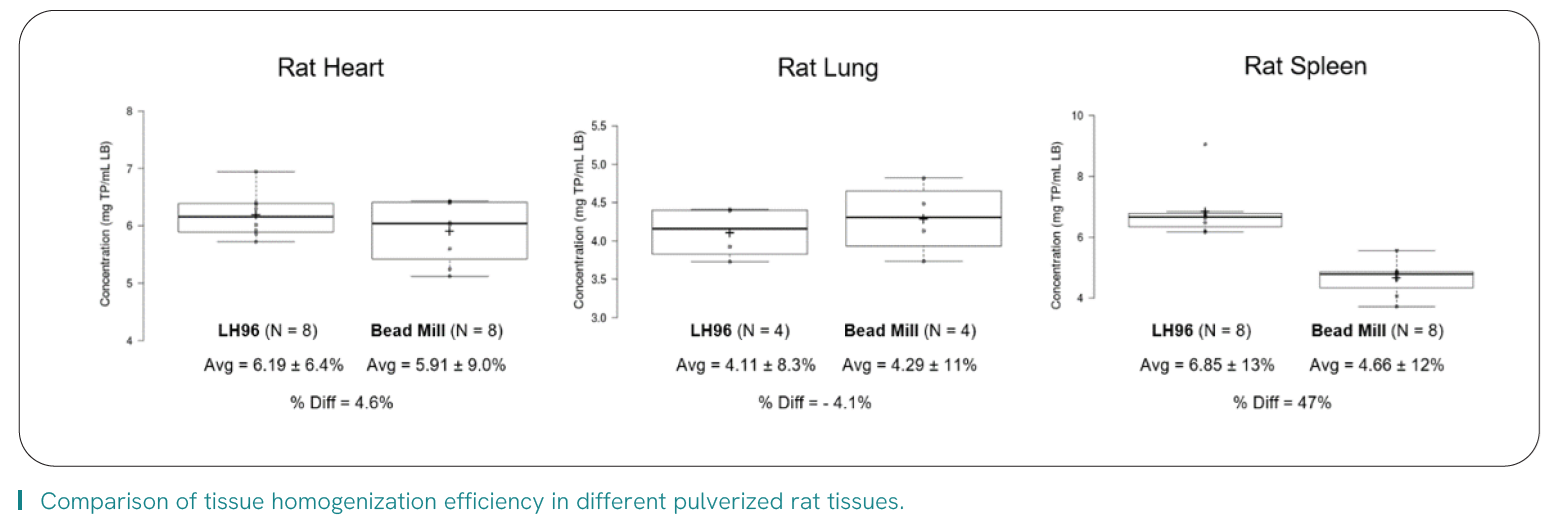

Variability in sample prep, particularly in upstream tissue dissection and homogenization, has been identified as a key determinant of the downstream robustness of technologies such as LC-MS [1] and NGS [2] when generating quality data and study results. It also directly affects the core challenges labs face across the continuum of tissue prep.

Labs are keen to leverage automation to relieve bottlenecks at the bench and standardize protocols. This includes automated homogenization, which can offer measurable benefits:

- Increased operational efficiency and throughput, through reduced prep time and repetitive labor

- Improved consistency and analytical reproducibility, by minimizing the potential for human error

- Enhanced sample integrity, by minimizing touchpoints that risk contamination or excess degradation of temperature-sensitive analytes

Read the application note on how Pfizer implemented automated tissue homogenization in its workflow, increasing throughput by more than 40%, expanding the working range of tissue size and weight, and freeing hours of direct analyst time.

Research use only. Not for use in diagnostic procedures.

References

1. Piehowski, P. D., Petyuk, V. A., Orton, D. J., Xie, F., Moore, R. J., Ramirez-Restrepo, M., Engel, A., Lieberman, A. P., Albin, R. L., Camp, D. G., Smith, R. D., & Myers, A. J. (2013). Sources of technical variability in quantitative LC-MS proteomics: human brain tissue sample analysis. Journal of Proteome Research, 12(5), 2128–2137.

2. Nieuwenhuis, T. O., Giles, H. H., Arking, J. V. A., Patil, A. H., Shi, W., McCall, M. N., & Halushka, M. K. (2024). Patterns of unwanted biological and technical expression variation among 49 human tissues. Laboratory Investigation, 104(6), 102069.